Updated: September 2016

To inform the Open Philanthropy Project’s investigation of potential risks from advanced artificial intelligence, and in particular to improve our thinking about AI timelines, I (Luke Muehlhauser) conducted a short study of what we should learn from past AI forecasts and seasons of optimism and pessimism in the field.

1 Key impressions

In addition to the issues discussed on our AI timelines page, another input into forecasting AI timelines is the question, “How have people predicted AI — especially HLMI (or something like it) — in the past, and should we adjust our own views today to correct for patterns we can observe in earlier predictions?”1 We’ve encountered the view that AI has been prone to repeated over-hype in the past, and that we should therefore expect that today’s projections are likely to be over-optimistic.

To investigate the nature of past AI predictions and cycles of optimism and pessimism in the history of the field, I read or skim-read several histories of AI2 and tracked down the original sources for many published AI predictions so I could read them in context. I also considered how I might have responded to hype or pessimism/criticism about AI at various times in its history, if I had been around at the time and had been trying to make my own predictions about the future of AI.

Some of my findings from this exercise are:

- The peak of AI hype seems to have been from 1956-1973. Still, the hype implied by some of the best-known AI predictions from this period is commonly exaggerated.

- After ~1973, few experts seemed to discuss HLMI (or something similar) as a medium-term possibility, in part because many experts learned from the failure of the field’s earlier excessive optimism.

- The second major period of AI hype, in the early 1980s, seems to have been more about the possibility of commercially useful, narrow-purpose “expert systems,” not about HLMI (or something similar).

- The collection of individual AI forecasts graphed here is not very diverse: about 70% of them can be captured by three categories: (1) the earliest AI scientists, (2) a tight-knit group of futurists that emerged in the 1990s, and (3) people interviewed by Alexander Kruel in 2011-2012.

- It’s unclear to me whether I would have been persuaded by contemporary critiques of early AI optimism, or whether I would have thought to ask the right kinds of skeptical questions at the time. The most substantive critique during the early years was by Hubert Dreyfus, and my guess is that I would have found it persuasive at the time, but I can’t be confident of that.

I can’t easily summarize all the evidence I encountered that left me with these impressions, but I have tried to collect many of the important quotes and other data below. Then, in a final subsection, I summarize some questions I might have investigated if I had more time.

I would be curious to learn whether people who read a set of sources similar to the set I consulted come away from that exercise with roughly the same impressions I have. I would also be curious to hear how many AI scientists who were active during most of the history of the field share my impressions.

2 The peak of AI hype

The histories I read left me with the impression that some (but not all) of the earliest AI researchers — starting around the time of the Dartmouth Conference in 1956 — thought HLMI (or something like it) might only require a couple decades of work.

For example, Moravec (1988) claims that John McCarthy founded the Stanford AI project in 1963 “with the then-plausible goal of building a fully intelligent machine in a decade” (p. 20).

In some cases, this optimism may have been partly encouraged by the hypothesis that solving computer chess might be roughly equivalent to solving AI in full generality. Feigenbaum & McCorduck (1983), p. 38, report:

These young [AI scientists of the 1950s and 60s] were explicit in their faith that if you could penetrate to the essence of great chess playing, you would have penetrated to the core of human intellectual behavior. No use to say from here that somebody should have paid attention to all the brilliant chess players who are otherwise not exceptional, or all the brilliant people who play mediocre chess. This first group of artificial intelligence researchers… was persuaded that certain great, underlying principles characterized all intelligent behavior and could be isolated in chess as easily as anyplace else, and then applied to other endeavors that required intelligence.

Another reason for early optimism might have been that some AI scientists thought it might be relatively easy to learn how the human mind worked. Newquist (1994), p. 69, reports:

The question that began to haunt [the earliest] AI researchers was, “If we don’t know how humans think, how can we get a machine to think like a human?” At first this seemed like a minor problem, one that most researchers believed would be overcome within a matter of years.

This impression of widespread over-optimism in the early days of the field is supported by some of the earliest published AI predictions:

- In a 1957 talk,3 AI pioneer Herbert Simon said: “there are now in the world machines that think, that learn, and that create. Moreover, their ability to do these things is going to increase rapidly until in a visible future the range of problems they can handle will be coextensive with the range to which the human mind has been applied.” It’s unclear what Simon meant by this, and he doesn’t provide an explicit timeline for machines that can handle problems “coextensive” with the range of problems faced by human minds, but Simon’s comment sounds optimistic that highly general AI systems would be built in the next few decades, especially when one reads this quote in the context of his other predictions from the same talk, for example that within 10 years a computer will “be the world’s chess champion” and “will write music that will be accepted by critics as possessing considerable aesthetic value.”

- In a 1959 report, the mathematician and cryptologist I.J. Good4 seems to have predicted not just HLMI but also an intelligence explosion within the next few decades: “My own guess for the time it will take to develop a really useful artificial brain is 20 years multiplied or divided by 1 1/2, if it is done during the next 100 years (which I think is odds on)… Once a machine is designed that is good enough, say at a cost of $100,000,000, it can be put to work on designing an even better machine. At this point an ‘explosion’ will clearly occur; all the problems of science and technology will be handed over to machines and it will no longer be necessary for people to work.” In 1962,5 Good provided a more explicit timeline for when an intelligence explosion would occur: “A baby is a very complicated ‘device’, a product of billions of years of evolution, but only a million of those years were spent in human form. Consequently our main problem is perhaps to program or build a machine with the latent intelligence of a small lizard, totally unable to play draughts. A small lizard is handicapped by having a small brain. If we could build a machine with the latent intelligence of a small lizard, then, at many times the expense, we could probably build one with that of a baby. With a further small percentage increase in cost we could reach the level of the baby Newton and better. We could then educate it and teach it its own construction and ask it to design a far more economical and larger machine. 
At this stage there would unquestionably be an explosive development in science, and it would be possible to let the machines tackle all the most difficult problems of science… For what it is worth, my guess of when all this will come to pass is 1978, and the cost of $10^(8.7 ± 1.0).” In a 1965 article he added that he thought it was “more probable than not” that an “ultraintelligent machine” would be built “within the twentieth century,” defining an ultraintelligent machine as “a machine that can far surpass all the intellectual activities of any man however clever.” By 1970 he had pushed his timelines back, predicting an ultraintelligent machine would be built “c. 1994 ± 10” (p. 69).6

- In 1961, AI pioneer Marvin Minsky said:7 “I am very optimistic about the eventual outcome of the work on machine solution of intellectual problems. Within our lifetime machines may surpass us in general intelligence. I believe that Simon and his colleagues share this view.” (Note that Minsky was then 33 years old; he died in 2016.) Later, in 1967, he wrote: “We are now immersed in a new technological revolution concerned with the mechanization of intellectual processes. Today we have the beginnings: machines that play games, machines that learn to play games; machines that handle abstract — non-numerical — mathematical problems and deal with ordinary-language expressions; and we see many other activities formerly confined within the province of human intelligence. Within a generation, I am convinced, few compartments of intellect will remain outside the machine’s realm – the problem of creating ‘artificial intelligence’ will be substantially solved” (p. 2).8
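Good’s two numerical guesses are stated in a compressed form, so it may help to spell out the ranges they seem to imply. The sketch below is only my reading of his notation: it assumes “20 years multiplied or divided by 1 1/2” means a range from 20/1.5 to 20×1.5 years counted from the 1959 report, and that the 1962 cost figure is 10 raised to the power 8.7 ± 1.0 dollars. The function names are hypothetical helpers, not anything from Good’s text.

```python
def multiplicative_range(central, factor):
    """Return (low, high) for 'central multiplied or divided by factor'."""
    return central / factor, central * factor


def power_of_ten_range(exponent, plus_minus):
    """Return (low, mid, high) for 10 ** (exponent ± plus_minus)."""
    return (
        10 ** (exponent - plus_minus),
        10 ** exponent,
        10 ** (exponent + plus_minus),
    )


# Assumed reading of Good's 1959 guess: 20 years, times or divided by 1.5.
years_low, years_high = multiplicative_range(20, 1.5)

# Assumed reading of Good's 1962 cost guess: 10^(8.7 ± 1.0) dollars.
cost_low, cost_mid, cost_high = power_of_ten_range(8.7, 1.0)

print(f"1959 guess: {years_low:.1f} to {years_high:.1f} years after 1959")
print(f"1962 cost guess: ${cost_low:,.0f} to ${cost_high:,.0f} "
      f"(central value ${cost_mid:,.0f})")
```

Under these assumptions, the 1959 guess spans roughly 13 to 30 years, and the 1962 cost guess spans roughly $50 million to $5 billion around a central value of about $500 million.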

On the other hand, the hype implied by some other AI predictions from this period is commonly exaggerated.

For example, one of the most commonly-quoted early AI forecasts is Herbert Simon’s 1960 prediction that “machines will be capable, within twenty years, of doing any work that a man can do.”9 But when read in context, it isn’t so clear that Simon was talking about HLMI (or something like it), given that he thought humans would retain comparative advantage over these machines in many areas:

The division of labor between man and automatic devices will be determined by the doctrine of comparative advantage: those tasks in which machines have relatively the greatest advantage in productivity over man will be automated; those tasks in which machines have relatively the least advantage will remain manual. I have done some armchair analysis of what this proposition means for predictions of the occupational profile twenty years hence. I do not have time to report this analysis in detail here, but I can summarize my conclusions briefly. Technologically, as I have argued earlier, machines will be capable, within twenty years, of doing any work that a man can do. Economically, men will retain their greatest comparative advantage in jobs that require flexible manipulation of those parts of the environment that are relatively rough — some forms of manual work, control of some kinds of machinery (e.g., operating earth-moving equipment), some kinds of nonprogrammed problem solving, and some kinds of service activities where face-to-face human interaction is of the essence. Man will be somewhat less involved in performing the day-to-day work of the organization, and somewhat more involved in maintaining the system that performs the work.

Another commonly-cited source of early AI hype is a 1970 article in Life magazine by Brad Darrach titled “Meet Shaky, the first electronic person.”10 Darrach writes:

Marvin Minsky… recently told me with quiet certitude: ‘In from three to eight years we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will be able to educate itself with fantastic speed. In a few months it will be at genius level and a few months after that its powers will be incalculable.’ … When I checked Minsky’s prophecy with other people working on Artificial Intelligence, however, many of them said that Minsky’s timetable might be somewhat wishful — “give us 15 years,” was a common remark — but all agreed that there would be such a machine and that it could precipitate the third Industrial Revolution, wipe out war and poverty and roll up centuries of growth in science, education and the arts… Many computer scientists believe that people who talk about computer autonomy are indulging in a lot of cybernetic hoopla. Most of these skeptics are engineers who work mainly with technical problems in computer hardware and who are preoccupied with the mechanical operations of these machines. Other computer experts seriously doubt that the finer psychic processes of the human mind will ever be brought within the scope of circuitry, but they see autonomy as a prospect and are persuaded that the social impact will be immense.

However, there are many reasons to doubt the accuracy of Darrach’s reporting:

- McCorduck (2004), ch. 10, reports that “[Darrach’s article] was more than science fiction: the AI community feels it was victimized in this instance by outright lies.” She quotes Bert Raphael, “who had spent a lot of time with Darrach” for the article, as saying “We were completely taken in by [Darrach’s] sincerity and interest in what we were doing, and then he went off and wrote… as if he’d seen many things he never saw, and wrote about seeing Shakey going down the hall and hurrying from office to office, when in fact all the time he’d been here we were in the process of changing one computer system to another and never demonstrated anything for him. There were many direct quotes that were imaginary or completely out of context.” She also writes that “Marvin Minsky was so exercised that he wrote a long rebuttal and denial of quotations attributed to him.”11

- Similarly, Crevier (1993), p. 96, reports that “Minsky was quoted in the article as saying… ‘in from three to eight years we will have a machine with the general intelligence of an average human being.’ Upon seeing his statement in print, however, Minsky vehemently denied having made it and even issued a [letter] denouncing the article…”

- In an interview published as chapter 2 of Stork (1997), Minsky said Darrach’s quote attributed to him was “made up.” Reflecting back on his views at the time, Minsky says “I believed in realism, as summarized by John McCarthy’s comment to the effect that if we worked really hard, we’d have an intelligent system in from four to four hundred years.” Of course, it’s hard to know whether Minsky was accurately recalling his ~1970 expectations about AI progress. The earliest source I could find for Minsky endorsing McCarthy’s “realism” is a 1981 article,12 which says: “On the question of when [we might build a “truly intelligent machine”], Minsky quoted AI pioneer John McCarthy… who told one reporter that it could happen in ‘four to 400 years.’” The earliest source I could find for McCarthy saying something similar is from a 1977 article13 in which McCarthy is quoted as saying that future AI work would require “conceptual breakthroughs,” clarifying that “What you want is 1.7 Einsteins and 0.3 of the Manhattan Project, and you want the Einsteins first. I believe it’ll take five to 500 years.” In a 1978 article,14 McCarthy is reported as saying that “domestic robots are anywhere from five to 500 years away.” McCarthy and Minsky might have had similar views in 1970, but I can’t be sure given the sources available to me.

- Darrach’s article contains numerous unquestionable errors. Here are three examples: (1) Throughout the piece, Darrach refers to the Stanford robot Shakey as “Shaky,” even though (as far as I know) its name had only ever been spelled “Shakey” in Stanford’s materials;15 (2) Darrach writes that “armed with the right devices and programmed in advance with basic instructions, Shaky could travel about the moon for months at a time and, without a single beep of direction from the earth, could gather rocks, drill cores, make surveys and photographs and even decide to lay plank bridges over crevices he had made up his mind to cross,” but this is an absurd exaggeration: as Crevier (1993) noted on page 96, “In fact, [Shakey] could barely negotiate straight corridors!” (3) Darrach refers to Alan Turing as “Ronald Turing.”

Furthermore, it’s worth remembering that some quotes from the time are more conservative than the oft-quoted examples of early AI hype. For example, in a widely-read 1960 paper called “Man-Computer Symbiosis,” psychologist and computer scientist J.C.R. Licklider writes:

Man-computer symbiosis is an expected development in cooperative interaction between men and electronic computers. It will involve very close coupling between the human and the electronic members of the partnership. The main aims are 1) to let computers facilitate formulative thinking as they now facilitate the solution of formulated problems, and 2) to enable men and computers to cooperate in making decisions and controlling complex situations without inflexible dependence on predetermined programs. In the anticipated symbiotic partnership, men will set the goals, formulate the hypotheses, determine the criteria, and perform the evaluations. Computing machines will do the routinizable work that must be done to prepare the way for insights and decisions in technical and scientific thinking… A multidisciplinary study group, examining future research and development problems of the Air Force, estimated that it would be 1980 before developments in artificial intelligence make it possible for machines alone to do much thinking or problem solving of military significance. That would leave, say, five years to develop man-computer symbiosis and 15 years to use it. The 15 may be 10 or 500, but those years should be intellectually the most creative and exciting in the history of mankind.

Moreover, there were always many who did not take AI very seriously. For example, AI scientist Drew McDermott wrote in 1976 that:

As a field, artificial intelligence has always been on the border of respectability, and therefore on the border of crackpottery. Many critics… have urged that we are over the border.

I discuss the writings of some of these critics in a later section.

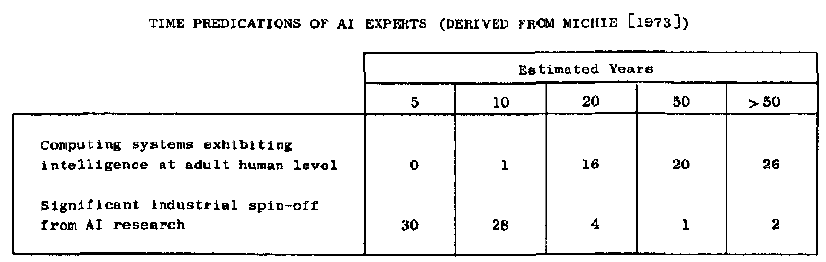

What about surveys of experts during this early period? As mentioned here, the earliest survey we have is from 1972, reported in Michie (1973) as a survey of “sixty-seven British and American computer scientists working in, or close to, the machine intelligence field.” The results of that survey, as presented more readably in Firschein et al. (1973),16 were:

Thus, 27% of respondents thought that “computing systems exhibiting intelligence at [the] adult human level” would be built in 20 years or less, 32% of respondents thought they’d be built in 20-50 years, and 42% of respondents thought they’d be built in more than 50 years.17 Unfortunately, respondents don’t seem to have been asked for their confidence levels.

I am not aware of another expert survey of timelines to HLMI (or something like it) until 2005 (see here).18

3 The first AI winter and after

Some AI histories say the first “AI winter” began near 1973, when DARPA funding for AI research decreased19 and James Lighthill published the “Lighthill report” that “formed the basis for the decision by the British government to end support for AI research in all but two universities.”20

Other sources paint somewhat different pictures. For example:

- The history of AI in chapter 1 of Russell & Norvig (2010) describes the years 1952-1969 with the section title “Early enthusiasm, great expectations,” and describes the overlapping years of 1966-1973 with the section title “A dose of reality.” The 1966 event they point to is an advisory committee report which found that “there has been no machine translation of general scientific text, and none is in immediate prospect.” As a result, Russell & Norvig write, “all U.S. government funding for [machine] translation projects was cancelled.” In addition to that report and the Lighthill report, they also point to a 1969 book by Marvin Minsky and Seymour Papert which proved certain negative results about the capabilities of simple versions of neural networks. Russell and Norvig say that as a result, “research funding for neural-net research soon dwindled to almost nothing.”

- In contrast, Nilsson (2009), ch. 21, considers 1975 to be the beginning of AI’s “boomtimes”: “Even though the Mansfield Amendment [requiring DARPA to fund mission-oriented research rather than more basic research] and the Lighthill Report caused difficulties for basic AI research during the 1970s, the promise of important applications sustained overall funding levels from both government and industry. Excitement, especially about expert systems, reached a peak during the mid-1980s. I think of the decade of roughly 1975–1985 as ‘boomtimes’ for AI.”

- McCorduck (2004) reports skepticism from at least one lab as having come later than 1974, at least in the memory of one participant: “Danny Hillis recalled visiting the MIT AI Lab as a freshman in 1974, and finding AI in a state of ‘explosive growth.’ Could general-purpose intelligence be far off? By the time he joined the Lab as a graduate student a few years later, ‘the problems were looking more difficult. The simple demonstrations turned out to be just that. Although lots of new principles and powerful methods had been invented, applying them to larger, more complicated problems didn’t seem to work.’”

Regardless of the details, the sources I read all seemed to agree that by the early-to-mid 1970s, if not sooner, most AI researchers had begun to realize how difficult HLMI was likely to be, and to guard against over-optimistic predictions of AI progress.

For example, Crevier (1993) writes (in the preface):

While [the] AI experiments of the 1960s and early 1970s were fun to watch…, it soon became clear that the techniques they employed would not be useful apart from dealing with carefully simplified problems in restricted areas.

On page 196, Crevier adds:

At the end of the 1970s, artificial intelligence [was] now older and wiser… On the practical side, however, the intelligent machine seemed no closer than at the end of the 1960s.

Nilsson (1995) seems to agree: “Sometime during the 1970s, AI changed its focus from developing general problem-solving systems to developing expert programs.” One reason for this change of focus, he says, was that “the problem of building general, intelligent systems” was “very hard.”

For additional examples of AI scientists realizing in the early-to-mid 1970s that AI progress would be much harder than they initially thought, see chapter 5 of Crevier (1993).

Overall, my sense is that the undeniable failure of early AI hype was no secret, and stuck with the field thereafter. For example, Crevier (1993), p. 144, says:

AI researchers… appear… to have learned to guard against exaggerating the accuracy of their current theories, as the founders of AI did in their 1960s forecasts. Older researchers progressively realized in the 1970s that their schedule had been way ahead of reality. When AI later found its second wind [in the early 80s], some of them, as we’ll see, cautioned their younger colleagues against overclaiming.

4 The second wave of hype

By the early 1980s, some narrowly-focused “expert systems” achieved commercial viability and inspired increased investment in the field of AI. Russell & Norvig summarize:21

The first successful commercial expert system, R1, began operation at the Digital Equipment Corporation… The program helped configure orders for new computer systems; by 1986, it was saving the company an estimated $40 million a year. By 1988, DEC’s AI group had 40 expert systems deployed, with more on the way. DuPont had 100 in use and 500 in development, saving an estimated $10 million a year. Nearly every major U.S. corporation had its own AI group and was either using or investigating expert systems.

In 1981, the Japanese announced the “Fifth Generation” project, a 10-year plan to build intelligent computers… In response, the United States formed the Microelectronics and Computer Technology Corporation (MCC) as a research consortium designed to assure national competitiveness. In both cases, AI was part of a broad effort, including chip design and human-interface research. In Britain, the Alvey report reinstated the funding that was cut by the Lighthill report…

Overall, the AI industry boomed from a few million dollars in 1980 to billions of dollars in 1988, including hundreds of companies building expert systems, vision systems, robots, and software and hardware specialized for these purposes.

So was this wave of hype about HLMI (or something like it), or was it merely about building commercially viable but quite narrow expert systems? Though the evidence is mixed, I’m inclined toward the latter interpretation.

For example, Crevier recalls his encounter with the early 80s hype this way:22

In the early 1980s… I read about the next breakthrough — expert systems. While broad-based artificial intelligence might still be many years away, the idea that one could create systems that captured the decision-making processes of human experts, even if those systems operated only on narrowly focused tasks, suddenly seemed on the horizon or, at most, just over it.

One source from the time displaying HLMI-esque hype is a 1982 article in Fortune,23 which opens with these words: “Endowing a computer with genuine intelligence would rank in importance with the Industrial Revolution… Recent developments raise the question of whether researchers in ‘artificial intelligence’ are on the threshold of a breakthrough…” However, my guess is that this was basically just an unfounded “hook” for the article, since the rest of the article focuses on the challenges of getting even very simple, narrow AI programs to work, and concludes with a section titled “Exaggeration’s whipsaw”:

In the past, AI has often succumbed to the “first-step fallacy” — the assumption that success with a simple system is the first step to a practical, intelligent machine. What people have usually uncovered when they tried to take the next step were the prohibitive economics of the combinatorial explosion… even with their new theoretical insights, most AI researchers are cautious and concerned that once again the state of excitement about AI exceeds the state of the art. “We’re being whipsawed by our history,” says [Patrick] Winston. “In the days when it was difficult to get funding for AI, we found ourselves locked into ever-increasing exaggeration. I’m worried that the result of a fad will be romantic expectations that, unfulfilled, will lead to great disappointment, as happened before.”

Quite a few breakthroughs and many more years of work will be necessary before the overall quality of computer “thought” rises above the idiot level…

A 1984 Fortune article24 by the same author adds:

Expert systems… are suddenly the biggest technology craze since genetic engineering… But quite a few computer scientists… think that enthusiasm for the present technology is out of hand… Minsky and Schank contend that today’s systems are largely based on 20-year-old programming techniques that have merely become practical as computer power got cheaper. Truly significant advances in computer intelligence, they say, await future breakthroughs in programming.

Expert systems that can routinely outperform human experts will be much rarer than the hoopla suggests… proponents of expert systems envision scenarios in which, for example, various medical specialists would pool their expertise in a diagnostic system that would prove superior to any doctor. Another promised fantasy is an “information fusion” system that will automatically gather, synthesize, and analyze information from many sources and then offer up conclusions and advice… Such developments almost certainly are years and years away…

Despite their origins in artificial intelligence research, expert systems have no more special claim to “intelligence” than many conventional computer programs. In fact, expert systems display fewer of the attributes classically associated with intelligence, such as the ability to learn or to discern patterns amid confusion, than code-breaking and decision-aiding programs that first emerged during World War II…

The kinds of applications for which expert systems are likely to improve on human experts are very specialized and very few.

Indeed, the term “AI winter” comes not from outside the field, but instead was introduced by AI researchers near the peak of the 1980s hype, in a 1984 panel discussion25 in which senior AI scientists urged the field to remember earlier over-optimistic predictions and avoid over-hyping the pace of AI progress. The moderator of the panel, Drew McDermott, opened the discussion this way:

In spite of all the commercial hustle and bustle around AI these days, there’s a mood that I’m sure many of you are familiar with of deep unease among AI researchers who have been around more than the last four years or so. This unease is due to the worry that perhaps expectations about AI are too high, and that this will eventually result in disaster… it is important that we take steps to make sure [an] “AI Winter” doesn’t happen — by disciplining ourselves and educating the public.

Another source of evidence about whether the hype of the early 1980s was about HLMI (or something like it) is the collection of AI predictions discussed here. As you can see in the graph of AI forecasts reproduced in that section, the research project resulting in that collection of forecasts found only three published individual predictions of HLMI (or something like it) between 1971 and 1992: two by roboticist and futurist Hans Moravec, and one by AI scientist David Waltz.26 All three forecasts were derived from attempts to extrapolate trends in computer power to the point when we might have something resembling (their estimates of) the computing power of the human brain, and do not seem very related to contemporaneous hype about expert systems.

Overall, then, my sense is that the hype of the early 1980s was primarily about the possibility of commercially viable, narrow-purpose expert systems, not about the medium-term possibility of HLMI.

5 Four categories of published AI forecasts

As I show in a spreadsheet,27 the 65 individual AI forecasts in the collection of forecasts graphed here can be grouped into four categories that I find reasonably intuitive:

- 5 are the pre-1971 forecasts of the earliest AI scientists.

- 18 are the forecasts of a relatively tight-knit group of futurists that emerged in the 1990s.

- 23 are the forecasts of those interviewed by Alexander Kruel in 2011-2012.

- 19 are “other.”
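As a quick check on these counts, the “other” category and the share covered by the three named categories both follow from the stated total of 65 forecasts. The snippet below is a minimal sketch that takes the total and the three named counts as given; the category labels are my own shorthand.

```python
# Counts taken from the list above; labels are shorthand, not official names.
total_forecasts = 65
named_categories = {
    "earliest AI scientists (pre-1971)": 5,
    "1990s futurists": 18,
    "Kruel interviewees (2011-2012)": 23,
}

# Forecasts covered by the three named categories.
named_total = sum(named_categories.values())

# Whatever remains of the 65 falls into the "other" category.
other = total_forecasts - named_total

# Fraction of all forecasts captured by the three named categories.
named_share = named_total / total_forecasts

print(f"named categories: {named_total} of {total_forecasts} ({named_share:.1%})")
print(f"other: {other}")
```

The three named categories account for 46 of the 65 forecasts, or a little over 70%, which leaves 19 for “other.”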

Categories (2) and (3) require some explaining.

As for (2): The people I labeled “90s futurists” gathered on mailing lists such as Extropy Chat and SL4 to discuss topics such as transhumanism and the technological singularity, and those that didn’t engage directly with those mailing lists nevertheless spoke or corresponded regularly with several of those who did.

As for (3): The forecasts extracted from a series of interviews by transhumanist Alexander Kruel came from a more diverse group of people, including e.g. Fields medalist Timothy Gowers, early AI scientist Nils Nilsson, DeepMind co-founder Shane Legg, historian Richard Carrier, and neurobiologist Mark Changizi.28 While this is a diverse set of people, it is tempting to treat the interview series as a survey, since these respondents were asked more-or-less the same questions, all within a relatively narrow window of time (2011-2012).

My conclusion from this is that the individual HLMI forecasts collected thus far are not particularly diverse. They may also represent a heavily biased sample, for example because most of these 65 forecasts were easy to find by the people conducting the search. The original collection of forecasts was assembled by contractors working with MIRI, which created the LessWrong.com website to which most of Kruel’s interviews were published, and which was co-founded by one of the “90s futurists” (Eliezer Yudkowsky) who participated heavily in the Extropy Chat mailing list.29 If the people conducting the search for published HLMI forecasts had participated in different social networks (for example, the machine learning community), perhaps they would have uncovered a fairly different set of published forecasts.30

I see all this as one more reason not to draw any strong conclusions from patterns observed in the distribution of individual AI forecasts collected thus far.

6 Early potential sources of skepticism about rapid AI progress

If I had been around in the 1950s and 60s and tried to forecast HLMI at the time, what sources of skepticism about rapid AI progress would I have had access to? In this subsection I discuss several early sources and potential sources of skepticism about the likelihood of rapid progress toward HLMI (or something like it).31

6.1 Taube

One of the earliest critiques of AI is Taube (1961), by the librarian Mortimer Taube. I don’t think I would have found Taube’s book persuasive at the time.

Taube’s first major argument (e.g. see pp. 14, 20) is that machine intelligence is incompatible with Gödel’s first incompleteness theorem, but he never seems to answer the potential rejoinder: if the human brain exhibits intelligent functioning without violating Gödel’s first incompleteness theorem, why should we expect that a machine cannot?32

Taube’s second major argument (starting on p. 33) is that translation between human languages requires intuition because there is no one-to-one mapping between elements found in different languages, and computers can’t exhibit intuition. It’s possible to charitably interpret this as similar to some of Dreyfus’ more detailed and successful arguments (see below), but I don’t think I would have found it persuasive at the time, because Taube doesn’t provide much in the way of argument that computers can’t exhibit something like intuition, except when he (improperly) leans once again on Gödel’s first incompleteness theorem (p. 34).

I was unable to follow the logic of Taube’s fourth chapter (at least, not in a single reading). Taube seems to argue that machine learning is impossible, but then he admits that some AI programs already exist which learn to play games such as checkers.

I didn’t read the book any further, because the first four chapters were so unpersuasive.

6.2 Hubert Dreyfus

A more famous criticism of AI was philosopher Hubert Dreyfus’ infamous RAND report “Alchemy and Artificial Intelligence” (1965), later expanded into the book What Computers Can’t Do (1972).

The secondary sources I read told conflicting stories about Dreyfus’ early critiques of AI. Some sources (e.g. ch.8 of McCorduck 2004) left me with the impression that Dreyfus made poor arguments, was badly misinformed about the state of AI, and was rightly ignored by the AI community. Other sources (e.g. Crevier, Newquist, and Armstrong et al.33) instead suggest that Dreyfus’ critiques were correct on many counts, in ways that looked somewhat impressive in hindsight, though he sometimes overstated some things, and he took an unhelpful tone about it all.

I’ve been vaguely aware of these two different positions on Dreyfus for the last few years, and before I read Dreyfus (1965) for myself, I suspected the former camp was right because I didn’t feel optimistic about the likely value of a critique of AI from a continental philosopher who wrote his dissertation on Heidegger.

But now having read “Alchemy and Artificial Intelligence” for this investigation, I find myself firmly in the latter camp. It seems to me that Dreyfus’ 1965 critiques of 1960s AI approaches were largely correct, for roughly the right reasons, in a way that seems quite impressive in hindsight.34

Depending on future priorities, I might later add another section to this page which describes in some detail why I find Dreyfus (1965) impressive in hindsight, and why I might have found it persuasive at the time. For now, I only have the time to report my impressions and recommend that readers read Dreyfus (1965) for themselves. Since many sources badly misrepresent the claims and arguments in Dreyfus (1965), it is important not to rely exclusively on secondary sources that describe or respond to it.

Overall, my guess is that I would have found Dreyfus’ critique quite compelling at the time, if I had bothered to read it. Dreyfus’ arguments are inspired by his background in phenomenological philosophy, but he expresses his arguments in clear and straightforward language that I think would have made sense to me at the time even if I had never read any phenomenological philosophy (which indeed, I have not). Moreover, the AI community hardly responded to Dreyfus’ critique at all35 — and when they did, they often misrepresented his claims and arguments in ways that are easy to detect if one merely checks Dreyfus’ original report.36

But of course it is hard to know such a thing with any certainty. Perhaps I wouldn’t have understood Dreyfus’ arguments at the time or found them compelling. (I will never know what the experience of reading “Alchemy and Artificial Intelligence” is like without the benefit of knowing how the next 50 years of AI played out.) Or perhaps I would have initially found Dreyfus’ arguments compelling but then concluded they must be wrong in subtle or technical ways that I couldn’t detect, because the experts in the field didn’t seem to think they were good arguments. Or perhaps I would have dismissed his arguments due to his frequent sloppiness with language (e.g. he often says that such-and-such is impossible for a “digital computer,” but elsewhere in the report he makes clear that what he means is that such-and-such is impossible for a digital computer programmed using GOFAI techniques — on the other hand, narrower uses of terms such as “digital computer” were hardly unique to Dreyfus at the time, e.g. see Shannon’s comments quoted below). Or perhaps I wouldn’t have read Dreyfus (1965) at all, because the idea of a continental philosopher critiquing prestigious computer scientists wouldn’t have sounded like a promising thing to look into.

6.3 How many early AI scientists were skeptical of rapid progress?

The vast majority of early publications in the field of AI say nothing at all about how quickly the authors expect the field to progress toward HLMI (or something like it). It’s possible that most early AI scientists were more skeptical of rapid progress than Simon and Minsky, but that those who were skeptical were less motivated to put their expectations in writing than those who were optimistic.

Dreyfus (1965), p. 75, does name one AI scientist who seemed skeptical that the techniques of the 1960s could lead to HLMI: Claude Shannon, the “father of information theory” and a participant in the Dartmouth Conference. Dreyfus points to Shannon’s comments at a 1961 panel discussion:37

A mathematician friend of mine, Ed Moore, …once showed me a chart on which he had placed many of the scientists working with computers. The chart formed a kind of spectrum, ordered according to how much one believed computing machines were like the human brain or could be said to perform intelligent thought. On the far right were those who view computers as merely glorified slide rules useful only in carrying out computation, while at the far left were scientists who feel computers are already doing something pretty close to thinking. Moore had placed me… “a little left of center.” I think Dr. Pierce [who delivered his comments a few minutes earlier] has taken a very sound position quite close to dead center. Many of the differences between people along this spectrum relate, I think to a lack of careful distinction between computers as we now have them for working tools, computers as we are experimenting on them with learning and artificial-intelligence programs, and computers as we visualize them in the next or later generations.

…I should like to explore a bit further the differences between computers as we have them today and the human brain. On the one hand, we have the fact that is often pointed out that computing machines can do anything which can be described in detail with a finite set of instructions. A computer is a universal machine in the sense of Turing: It can imitate any other machine. If the human brain is a machine, a computer with access to sufficient memory can, in principle, exactly imitate the human brain. There are, however, two strings on this result. One is that no mention is made of time relations, and, in general, one machine imitating another is greatly slowed up by the mechanics of describing one machine in terms of the second. The second proviso is even more important. One machine can imitate another or carry out a computing operation only if one can describe exactly, and in all detail, the first machine or the desired computing operation. Of course, this we cannot do for the human brain. We do not know the circuitry in detail or even the operation of the individual components.

As a result of these and other considerations, our attempts to simulate thought processes in computers have so far met with but qualified success. As Dr. Pierce has pointed out, there are some things which are easy for computers to do, operations involving long sequential numerical calculations, and others which are unusually difficult and cumbersome. These latter include, in particular, such areas as pattern recognition, judgment, insight, and the like.

…I believe that… there is very little similarity between the methods of operation of [present-day] computers and the brain. Some of the apparent differences are the following. In the first place, the wiring and circuitry of the computers are extremely precise and methodical. A single incorrect connection will generally cause errors and malfunctioning. The connections in the brain appear, at least locally, to be rather random, and even large numbers of malfunctioning parts do not cause complete breakdown of the system. In the second place, computers work on a generally serial basis, doing one small operation at a time. The nervous system, on the other hand, appears to be more of a parallel-type computer with a large fraction of neurons active at any given time. In the third place, it may be pointed out that most computers are either digital or analog. The nervous system seems to have a complex mixture of both representations of data.

These and other arguments suggest that efficient machines for such problems as pattern recognition, language translation, and so on, may require a different type of computer than any we have today. It is my feeling that this computer will be so organized that single components do not carry out simple, easily described functions. One cannot say that this transistor is used for this purpose, but rather that this group of components together performs such and such function. If this is true, the design of such a computer may lead us into something very difficult for humans to invent and something that requires very penetrating insights… I know of very few devices in existence which exhibit this property of diffusion of function over many components…

If this sort of theoretical problem could be solved within the next few years, it appears likely that we shall have the hardware to implement it. There is a great deal of laboratory work in progress in the field of microminiaturization… We are almost certain to have components within a decade which reduce current transistor circuits in size much as the transistor and ferrite cores reduced the early vacuum-tube computers. Can we design with these a computer whose natural operation is in terms of patterns, concepts, and vague similarities rather than sequential operations on ten-digit numbers? Can our next generation of computer experts give us a real mutation for the next generation of computers?

…

We can attempt to refine and improve what we have as much as possible… or we can take a completely different tack and say, “Are there other types of computing machines that will do certain things better than the types we now have?” I am suggesting that there well may be such machines.

Elsewhere, Shannon writes (in 1963):38

So far most of [early AI] work must be described as experimental with little practical application… This is an area in which there is a good deal of scientific wildcatting with many dry holes, a few gushers, but mostly unfinished drilling.

Language translation has attracted much attention… As yet results are only mediocre. It is possible to translate rather poorly and with frequent errors but, perhaps, sufficiently well for a reader to get the general ideas intended. It appears that for really first-rate machine translation the computers will have to work at a somewhat deeper level than that of straight syntax and grammar. In other words, they must have some primitive notion, at least, of the meaning of what they are translating. If this is correct, the next step forward is a rather formidable one and may take some time and significant innovation.

Shannon goes on to survey progress in several other domains, and generally sounds optimistic that future progress is possible, though he doesn’t comment on how rapid we should expect that progress to be. Then he says:

The various research projects I have been discussing and many others of similar nature are all aimed at the general problem of simulating the humans or animal brain, at least from the behavioristic point of view, in a computing machine. One may divide the approaches to this problem into three main categories, which might be termed the logical approach, the [heuristic] approach, and the neurological approach. The logical approach aims at finding a… decision procedure which will solve all of the problems of a given class. This is typified by Wang’s program for theorem-proving in symbolic logic… [But] not all problems have available a suitable decision procedure. Furthermore, a decision procedure requires a deep and sophisticated understanding of the problem by the programer [sic] in all its detail.

The second method… is often referred to as heuristic programming… I believe that heuristic programming is only in its infancy and that the next ten or twenty years will see remarkable advances in this area…

The third or neurological approach aims at simulating the operation of the brain at the neural level rather than at the psychological or functional level. Although several interesting research studies have been carried out, the results are still open to much question as to interpretation… anyone attempting to construct a neural-net model of the brain must make many hypotheses concerning the exact operation of nerve cells and with regard to their cross-connections. Furthermore, the human brain contains some ten billion neurons [actually, it has 86 billion neurons], and the simulated nerve nets of computers at best contain a few thousand. [Earlier in the article, Shannon writes that “even if we had these ten billion circuit elements available, we would, by no means, know how to connect them up to simulate a brain.”] This line of research is an important one with a long-range future, but I do not expect too much in the way of spectacular results within the next decade or two unless, of course, there is a genuine break-through in our knowledge of neurophysiology.

With the explosive growth of the last two decades in computer technology, one may well ask what lies ahead. The role of the prophet is not an easy one. In the first place, we are inclined to extrapolate into the future along a straight line, whereas science and technology tend to grow at an exponential rate. Thus our prophecies, more often than not, are far too conservative. In the second place, we prophesy from past trends. We cannot foresee the great and sudden mutations in scientific evolution. Thus we find ourselves predicting faster railroad trains and overlooking the possibility of airplanes as being too fanciful.

…taking a longer-range view, I expect computers eventually to handle a large fraction of low-level decisions, even as now they take care of their own internal bookkeeping. We may expect them to… [replace] man in many of his semirote activities…

…Another problem often discussed is: What will we do if the machines get smarter than we are and start to take over? I would say in the first place, judging from the I.Q. level of machines now in existence and the difficulty of programming problems of any generality, that this bridge is a long way in the future, if we ever have to cross it at all.

Overall, my impression is that in the early 60s, Shannon did not think HLMI (or something like it) was plausible in the next few decades, and instead seemed to have in-hindsight reasonable expectations about the pace of future progress in the field. Moreover, I would likely have taken his views quite seriously, given that his reputation at the time was likely stronger than that of e.g. Simon or Minsky.

Were other early AI scientists relatively skeptical about rapid future progress on AI — before, say, 1968?

It’s possible that John McCarthy was, depending on how early he was using something like the “5 to 500 years” line, and depending on how he was allocating his probability mass across that vast range. But as mentioned earlier, I was unable to find a source for that quote earlier than 1977. Moreover, we must consider Moravec (1988)’s comment that McCarthy founded the Stanford AI project in 1963 “with the then-plausible goal of building a fully intelligent machine in a decade,” and Nilsson (2009)’s report that at a 2006 conference, McCarthy reminisced that “the main reason the 1956 Dartmouth workshop did not live up to my expectations is that AI is harder than we thought.”39 Given all this, my guess is that McCarthy had expectations during the earliest period that were similar to those of Simon and Minsky.

I wasn’t able to find additional pessimistic forecasts from AI scientists during the time I devoted to this investigation.

6.4 How skeptical would I have been about AI scientists’ forecasting abilities in the 50s and 60s?

No doubt I would have been skeptical in general of confident forecasts about the future of AI. But would AI scientists’ early optimism about future rapid progress in their field have persuaded me to admit some substantial chance (say, 10%) that even their most grandiose predictions might prove accurate?

One consideration is that the 1950s and 60s were arguably the peak of “futurism.” Long-term forecasting was taken more seriously than it typically is today, and large amounts of government funding flowed into research institutes such as RAND, which invented the Delphi method for long-term forecasting and released perhaps the most celebrated early Delphi study in 1964. (For more on the history of futurism, see Bell 2010.)

Moreover, many later studies demonstrating the relatively poor performance of expert forecasters, such as Tetlock (2005), had not yet been conducted and published.

Overall, my guess is that in the 1950s and 60s I would have had less reason to dismiss predictions made by experts in a technical field than I do today, with the benefit of extensive scientific evidence about the difficulty of long-term forecasting and the limits of expertise.

Still, there was substantial diversity of opinion at the time (see e.g. Shannon’s comments about Ed Moore’s “spectrum,” quoted above), and Simon and Minsky and their most optimistic colleagues did not persuade everyone, or even most people, of their optimism.

One interesting example of this is an exchange in which McCarthy and Minsky tried and failed to change someone’s mind about the immediate prospects for AI progress. This exchange was captured in the 1961 panel discussion I quoted from above.40

First, John Pierce, then Executive Director of Research at Bell Labs, presented a lecture which opened with an admission that he was not an expert on computers:

When I was asked to deliver a lecture in this series, no one expected me to talk about computers. I am not an expert in the field, and I certainly could not hope to present… the inside story of computer design, programming, and simulation…

Pierce went on to discuss some limitations of early AI programs, laying out a view that Shannon shortly thereafter described as being a bit to the right of Shannon’s own position, meaning somewhat more skeptical than Shannon of the degree to which computers are capable of “thinking.” (They were not discussing a philosophical point about “thinking” à la Searle, but instead were discussing their views about the practical contemporary and future capabilities of AI systems.)

During the general discussion period, John McCarthy (one of the foremost AI experts at the time) argued that Pierce should be more optimistic about the capability of machines, saying:

I think what Dr. Pierce said concerning present computer applications is true, but I think his general picture of things is almost completely false. His picture is static. It is based on computers as they are today and on practices already obsolete in some cases…

Pierce began his reply with “I am not at all converted by what Dr. McCarthy has said…” and then responded with a long sequence of specific replies to McCarthy’s points, in which he seems not the least intimidated by McCarthy’s authority on the subject.

A bit later, Minsky again pushed an optimistic view:

In the last two years we find the IBM 704, compared to solving problems of an order of magnitude more difficult than it did in 1958 or 1959. Although I doubt it, it still remains possible to me that the IBM 704 with its crude serial mode of operation could be as intelligent as man in fairly broad areas, just provided it had a larger memory.

…Concerning pattern recognition, just as a specific matter, I should bet that the serial IBM 704, if properly programmed, could read printed letters faster than a human, even though present programs do not. I should bet that eventually the 704 even could read script faster than a human.

(For context, note that a Samsung Galaxy S6 smartphone is 2.9 million times faster, in FLOPS, than an IBM 704.)

Pierce replied:

These are nice bets, but how long do we have to wait? Will I live so long? …There is a wonderful tendency to talk about things that lie in the future and that [one] cannot prove will not happen. This is good clean fun because it is the only way we have of giving variety to the future. When the future comes there is going to be just one future, but here in anticipation we can enjoy a lot of different futures, and I am pleased to see that everyone is enjoying himself. As for me, I do not know what is going to happen, and I find the future more difficult to talk about…

Pierce’s final comment is a flippant joke about one’s ability to predict the future:

In the matter of making a decision now on the basis of an unknown future, I am reminded of a remark inadvertently made by Mr. Webb, Administrator of [NASA]… Mr Webb stated on television that “We have a step-by-step program in which each step is based on the next.”

This exchange provides a concrete illustration of the obvious point that even though a few prominent AI scientists made wildly unrealistic forecasts in the early years of AI, they were not necessarily successful at persuading others that their forecasts were realistic, including at least one self-professed non-expert in AI.

6.5 Overall conclusions about contemporaneous sources of skepticism about early AI progress

Overall, my impression from what I’ve read is that I very well might have been swept up in the early AI hype if I had spent fewer than 15 hours familiarizing myself with the state of the field, and had mostly spoken with optimistic experts such as Simon and Minsky. But I very well might not have been swept up in their hype, just as John Pierce was not.

However, if I had spent more than 40 hours familiarizing myself with the state of the field, and with the aim of making a serious attempt to estimate the likely pace of future progress (e.g. as a research analyst for a major funder at the time, such as ARPA, the agency later renamed DARPA), I think it’s likely I would have come to views more similar to Shannon’s and Pierce’s than Simon’s and Minsky’s, especially if I had been investigating the field after 1965, and thus had a good chance of reading Dreyfus’ “Alchemy and Artificial Intelligence.” (Or, earlier, I might have encountered his brief skeptical comments in Greenberger (1962), pp. 321-322, and sought him out in person to learn more.)

7 What else might one investigate, on the topic of what we should learn from past AI predictions?

My investigation into what we can learn from past AI predictions was necessarily incomplete and non-systematic, and there are many potentially interesting threads I did not pursue, or did not pursue as deeply as I would if I had devoted more time to this topic. These include:

- A more thorough analysis of the degree to which Dreyfus (1965) and his later critiques of AI were correct or incorrect, and whether the AI community should have been able to recognize Dreyfus’ arguments as being (partially) correct at the time. (I might still pursue this investigation later, depending on future priorities.)

- A more thorough analysis of the degree to which the critiques of others, such as Taube and Lucas, had merit.

- How did the field’s funding level fluctuate in response to hype, pessimism, and other forces? The sources I read often included details on funding levels, but it was not part of my investigation to collect and synthesize that information.

- What do the earliest AI scientists (who are still alive) recall about their own expectations about AI progress at different points in the history of the field, and about the expectations of others in the field at those points in time?41

- Does anyone still possess a copy of Minsky’s 1970 “long rebuttal” to Brad Darrach? What does it say?

- Does anyone still possess a copy of Ed Moore’s “spectrum” drawing, mentioned by Shannon?

- A more thorough hunt for published forecasts of HLMI (or something like it). During my reading I encountered several qualifying forecasts that do not appear in the collection of forecasts graphed here, and I suspect further reading would uncover many more. Moreover, much of this dataset was assembled without access to primary sources, and I have often found discrepancies between primary and secondary sources on this topic, so one could check the accuracy of the forecasts represented in the current dataset by consulting the primary sources for its collected forecasts.

- My investigation here focused on forecasts, hype, and skepticism from the earliest years of AI. One could do a more thorough investigation of AI forecasts, hype, and pessimism in the 70s, 80s, 90s, and 2000s.

- How did university textbooks about AI evolve over time? One could order copies of every edition of every major AI textbook from the beginning of the field until today, and compare how they talked about different AI subtopics over time, how many pages were devoted to different sub-topics over time, what they said about which approaches seemed most promising for the future, etc.

- Perhaps the most substantial history of AI that I didn’t have time to consult for this investigation is Boden (2006) (especially chs. 4, 10-13). Is Boden’s account consistent with the impressions I report here? What does her account add to what I’ve summarized here?

8 Sources

| DOCUMENT | SOURCE |
|---|---|
| AI Impacts, “MIRI AI Predictions Dataset” | Source (archive) |
| Muehlhauser, “AI Impacts predictions dataset with predictions categorized by Luke Muehlhauser” | Source |
| AI Impacts, “AI Timeline Surveys” | Source (archive) |
| Alexander (1984) | Source (archive) |
| Armstrong et al. (2014) | Source (archive) |
| Bell (2010) | Source (archive) |
| Bizony (2000) | Source (archive) |
| Boden (2006) | Source (archive) |
| Bostrom (2014) | Source (archive) |
| Brachman & Smith (1980) | Source (archive) |
| Buchanan (2005) | Source (archive) |
| Chess Programming Wiki, “James R. Slagle” | Source (archive) |
| Chess Programming Wiki, “Laveen Kanal” | Source (archive) |
| Computer History Museum, “Artificial Intelligence” | Source (archive) |
| ComputerWorld, December 21, 1981 | Source (archive) |
| Crevier (1993) | Source (archive) |
| Dreyfus (1965) | Source (archive) |
| Dreyfus (1972) | Source (archive) |
| Dreyfus (2006) | Source (archive) |
| Extropy Chat | Source (archive) |
| Feigenbaum & Feldman (1963) | Source (archive) |
| Feigenbaum & McCorduck (1983) | Source (archive) |
| Firschein et al. (1973) | Source (archive) |
| GiveWell blog, “Sequence Thinking vs. Cluster Thinking” | Source (archive) |
| Good (1959) | Source (archive) |
| Good (1962) | Source |
| Good (1965) | Source (archive) |
| Good (1970) | Source (archive) |
| Gordon & Helmer-Hirschberg (1964) | Source (archive) |
| Greenberger (1962) | Source (archive) |
| GSMArena, “Samsung Galaxy S6 is as powerful as 2.9M IBM 704 supercomputers from 1954, infographic reveals” | Source (archive) |
| Haugeland (1985) | Source (archive) |
| Herculano-Houzel (2009) | Source (archive) |
| Internet Encyclopedia of Philosophy, “The Lucas-Penrose Argument about Gödel’s Theorem” | Source (archive) |
| KQED Radio, “Audio Archives Search” | Source |
| LessWrong, “Interview series on risks from AI” | Source (archive) |
| LessWrong, “Model Combination and Adjustment” | Source (archive) |
| Licklider (1960) | Source (archive) |
| LIFE Magazine, November 20, 1970 | Source |
| Lighthill (1973) | Source (archive) |
| Lucas (1961) | Source (archive) |
| Luger (2009) | Source (archive) |
| Machine Intelligence Research Institute (MIRI) home page | Source (archive) |
| McCorduck (2004) | Source (archive) |
| McDermott (1976) | Source (archive) |
| McDermott et al. (1985) | Source (archive) |
| Michie (1973) | Source (archive) |
| Minsky (1967) | Source (archive) |
| Minsky & Papert (1969) | Source (archive) |
| Moravec (1975) | Source |
| Moravec (1977) | Source |
| Moravec (1988) | Source (archive) |
| Muehlhauser (2012) | Source (archive) |
| National Academies Press (1999) | Source (archive) |
| National Academy of Sciences Automatic Language Processing Advisory Committee Report (1966) | Source (archive) |
| Neisser (1963) | Source (archive) |
| Newquist (1994) | Source (archive) |
| Nilsson (1995) | Source (archive) |
| Nilsson (2009) | Source (archive) |
| Poole & Mackworth (2010) | Source (archive) |
| Russell & Norvig (2010) | Source (archive) |
| Shannon (1963) | Source (archive) |
| Simon (1960) | Source (archive) |
| Simon (1965) | Source (archive) |
| Simon & Newell (1958) | Source |
| SL4 | Source (archive) |
| Slidegur, “Artificial Intelligence: Chapter 12” | Source |
| SRI International’s Artificial Intelligence Center, “Shakey” | Source (archive) |
| Stanford Encyclopedia of Philosophy, “The Chinese Room Argument” | Source (archive) |
| Stork (1997) | Source (archive) |
| Taube (1961) | Source (archive) |
| Technical Report 98-11 of the Department of Statistics at Virginia Polytechnic Institute and State University | Unpublished |
| Tetlock (2005) | Source (archive) |
| Turing (1950) | Source |
| Waltz (1988) | Source |
| Wikipedia, “AI winter” | Source (archive) |
| Wikipedia, “Bernard Widrow” | Source (archive) |
| Wikipedia, “Bertram Raphael” | Source (archive) |
| Wikipedia, “Claude Shannon” | Source (archive) |
| Wikipedia, “Continental philosophy” | Source (archive) |
| Wikipedia, “Daniel G. Bobrow” | Source (archive) |
| Wikipedia, “Dartmouth Conferences” | Source (archive) |
| Wikipedia, “Delphi method” | Source (archive) |
| Wikipedia, “Edward F. Moore” | Source (archive) |
| Wikipedia, “Edward Feigenbaum” | Source (archive) |
| Wikipedia, “FLOPS” | Source (archive) |
| Wikipedia, “History of artificial intelligence” | Source (archive) |
| Wikipedia, “Intelligence explosion” | Source (archive) |
| Wikipedia, “Mortimer Taube” | Source (archive) |
| Wikipedia, “Nils John Nilsson” | Source (archive) |
| Wikipedia, “Patrick Winston” | Source (archive) |
| Wikipedia, “Peter E. Hart” | Source (archive) |
| Wikipedia, “Raj Reddy” | Source (archive) |
| Wikipedia, “RAND Corporation” | Source (archive) |
| Wikipedia, “Reference class forecasting” | Source (archive) |
| Wikipedia, “Richard Greenblatt (programmer)” | Source (archive) |
| Wikipedia, “Richard O. Duda” | Source (archive) |
| Wikipedia, “Rod Burstall” | Source (archive) |
| Wikipedia, “Seymour Papert” | Source (archive) |
| Wikipedia, “Shakey the robot” | Source (archive) |
| Wikipedia, “Symbolic artificial intelligence” | Source (archive) |
| Wikipedia, “Technological singularity” | Source (archive) |
| Wikipedia, “Terry Winograd” | Source (archive) |
| Wikipedia, “Transhumanism” | Source (archive) |
| Wikipedia, “Trenchard More” | Source (archive) |